Hi Simon

It should work fine with CMRx. The protocol does not matter.

-

RE: Is CMRx supported? posted in YCCaster

-

New pricing plans posted in YouCORS

We have recently updated our pricing plans. Each plan now includes a dedicated server.

- This removes restrictions on mount point and client names, as there are no name conflicts.

- Allows us to show information about connections and events that have occurred on the caster, which helps customers track down errors on their own.

- A dedicated IP address allows the source table to show mount points only for a specific client.

- We also plan to allow customization of the caster.

In 2025 we plan to smoothly transfer all YouCORS users to dedicated servers. Because servers are no longer shared among users, we had to reconsider prices. We will update them on the landing page soon.

-

RE: Source table not being filled posted in YCCaster

@josep-bello Hi

Unfortunately no. If you are using HTTP auth, you'll get the NTRIP headers in the auth request and you can pass them back. But internally, YCCaster will not set the source.

-

RE: Source table not being filled posted in YCCaster

@josep-bello Hi

Depending on the type of authorization, you can either:

- set source params in mountpoints.yml

- or return them in the HTTP response
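For context, source params end up as one `STR` record per mount point in the NTRIP source table. The sketch below builds such a record from the field order commonly documented for NTRIP source tables. It is only an illustration: the `SourceEntry` class, its field names, and the sample values are hypothetical and are not YCCaster configuration keys or its HTTP auth response format.

```python
from dataclasses import dataclass, astuple


@dataclass
class SourceEntry:
    """Hypothetical container for the fields of one NTRIP STR record."""
    mountpoint: str
    identifier: str
    data_format: str
    format_details: str
    carrier: int          # 0 = none, 1 = L1, 2 = L1+L2
    nav_system: str
    network: str
    country: str          # three-letter country code
    latitude: float
    longitude: float
    nmea: int             # 1 if the client must send NMEA GGA, else 0
    solution: int         # 0 = single base, 1 = network
    generator: str
    compression: str
    authentication: str   # N = none, B = basic, D = digest
    fee: str              # N = no fee, Y = fee
    bitrate: int
    misc: str = ""


def to_str_record(entry: SourceEntry) -> str:
    """Join the fields with semicolons, prefixed by the STR record type."""
    return ";".join(["STR"] + [str(value) for value in astuple(entry)])


# Sample values are made up for illustration.
entry = SourceEntry(
    "BASE1", "Example City", "RTCM 3.2", "1005(10),1077(1)", 2,
    "GPS+GLO", "NONE", "USA", 40.0, -105.25, 0, 0,
    "hypothetical", "none", "B", "N", 9600,
)
print(to_str_record(entry))
```

If the source table stays empty, comparing what the caster returns against a record of this shape can help narrow down which params are missing.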

-

RE: Leica GS14 posted in YouCORS

@francisco All of the same principles apply to your base. If your rover gets a fixed solution at this location from one base but not from the other, look for the issue in the second base.

-

RE: Leica GS14 posted in YouCORS

Hi @francisco

I can give you only general recommendations:

- Insufficient Number of Satellites: A minimum number of satellites needs to be visible to the receiver to calculate a fixed solution. If not enough satellites are visible, the receiver can only provide a float solution.

- Poor Satellite Geometry: The position of the satellites in relation to each other can affect the accuracy of the positioning solution. Poor geometry can prevent a fixed solution.

- Obstructions: Physical obstructions like buildings or trees can block the signal from satellites, making it difficult to maintain a fixed solution. It's important to have a clear view of the sky.

- Tropospheric Delay: The troposphere can cause delays in the GNSS signals, which vary with height above sea level, weather conditions, and satellite elevation angle. This can affect the accuracy of the RTK solution.

- Internet Quality: A poor Internet connection between the base station and the rover can prevent a fixed solution. A stable and fast connection is crucial for RTK corrections.

To improve the chances of getting a fixed RTK solution, ensure the GNSS receiver has a good sky view, a stable Internet connection for RTK corrections, and is not hindered by physical obstructions. If you're in an area with poor visibility, try obtaining a fix in a more open area first and then move slowly to the desired location.
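When comparing two bases, it can help to log whether the rover actually reports a fixed or a float solution. A minimal sketch, assuming the rover outputs standard NMEA GGA sentences, where the fix quality field is 4 for RTK fixed and 5 for RTK float; the function name and the sample sentence are hypothetical, and the checksum is not verified here.

```python
# Common GGA fix quality codes; other values are reported as "other".
GGA_QUALITY = {
    0: "invalid",
    1: "autonomous",
    2: "DGPS",
    4: "RTK fixed",
    5: "RTK float",
}


def gga_fix_quality(sentence: str) -> str:
    """Return a label for the fix quality field of an NMEA GGA sentence."""
    body = sentence.strip().split("*")[0]   # drop the checksum part
    fields = body.split(",")
    if len(fields) < 7 or not fields[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    code = int(fields[6] or 0)              # an empty field is treated as invalid
    return GGA_QUALITY.get(code, f"other ({code})")


# Made-up sample sentence for illustration.
sample = "$GNGGA,123519.00,4807.038,N,01131.000,E,4,12,0.9,545.4,M,46.9,M,1.0,0000*47"
print(gga_fix_quality(sample))
```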

-

Planned Maintenance to YouCORS 08.06.2024 - 09.06.2024 posted in YouCORS

A big update of the service is planned. The operating system, the database, and all major applications will be updated to newer versions.

The server on which the service operates will be moved from Europe to the USA.

The user interface will be updated.

The following features will be added:

- Management of users and administrators

- Private casters and servers

We are sure to break something during such a big update. Therefore, the service team will work all weekend to fix all possible bugs and by Monday the service will be ready for normal operation.

If you have any questions, please contact support@hedgehack.com.

We apologize for any inconvenience,

Ilya

-

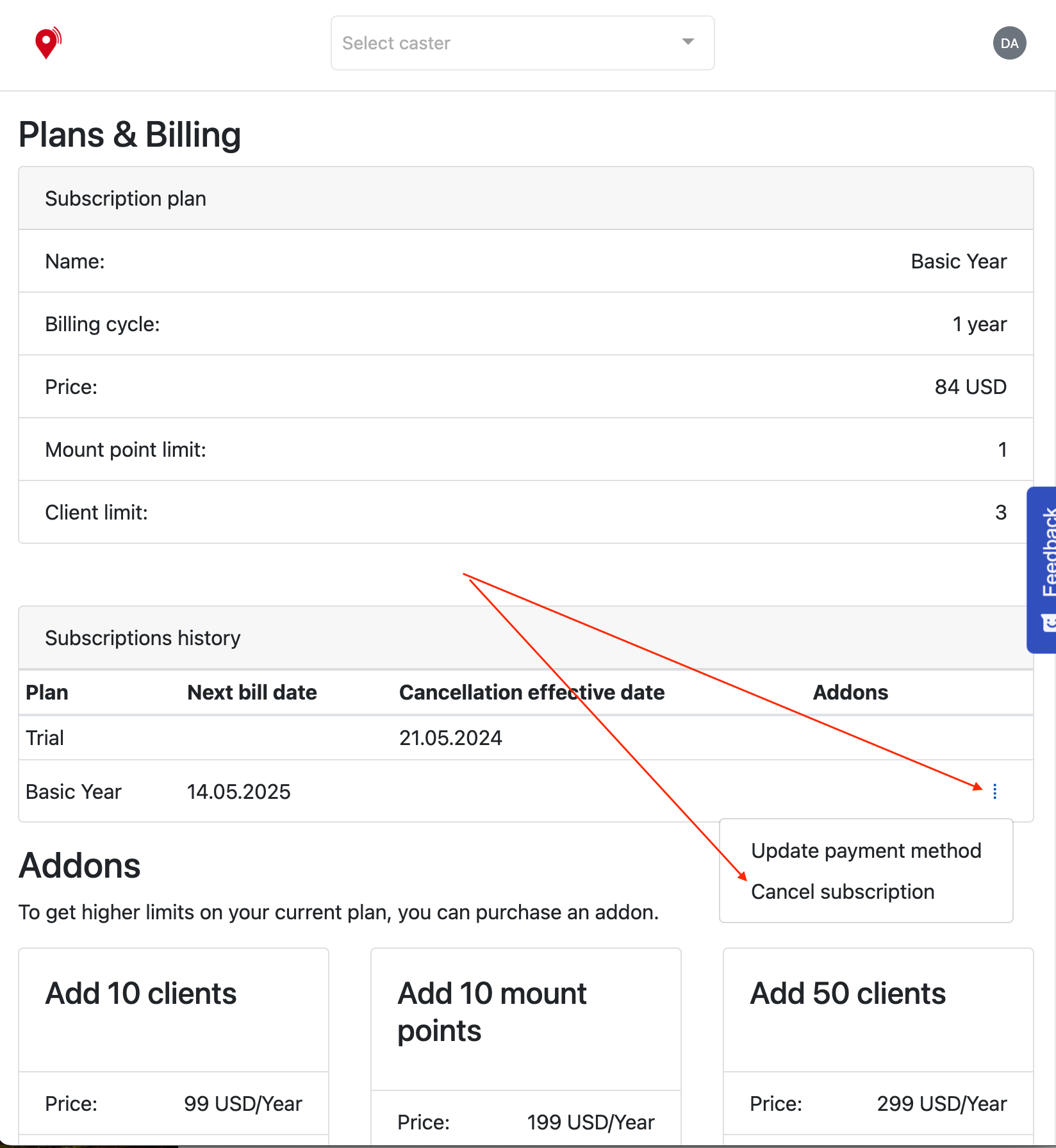

RE: Stop Youcors Subscription posted in YouCORS

@kalibaru-survey Hi

You can cancel your subscription on the Plans & Billing page. Open the details menu on your current subscription item.